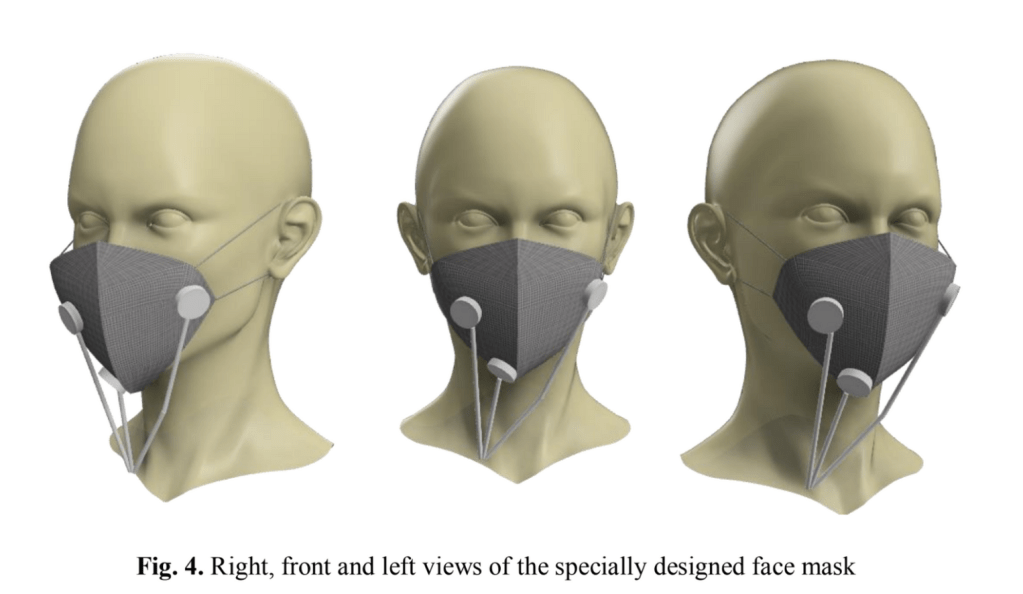

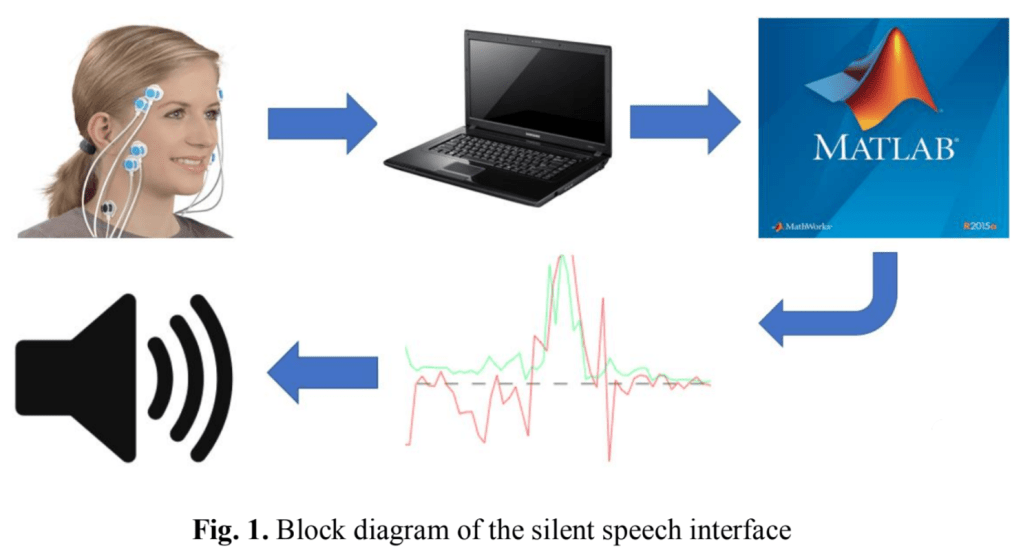

Silent (unvoiced) speech can be interpreted either by lip reading, which is difficult, or by using electromyography (EMG) electrodes that convert facial muscle movements into distinct electrical signals. These signals are processed in MATLAB and matched to a predefined word using the Dynamic Time Warping (DTW) algorithm. The identified word is converted to speech and can also be used to control a nearby device such as a motorized wheelchair.
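DTW suits this matching step because two utterances of the same word rarely take the same amount of time, and DTW aligns them by stretching or compressing the time axis before comparing them. The sketch below is a minimal Python illustration of classic DTW between two 1-D sequences (for example, EMG amplitude envelopes). It is not the project's MATLAB code. The absolute-difference local cost and the absence of a warping-window constraint are assumptions chosen for simplicity.

```python
import math
from typing import Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Classic dynamic time warping distance between two 1-D sequences.

    Uses |a[i] - b[j]| as the local cost and allows match, insertion and
    deletion steps, with no warping-window constraint (an assumption).
    """
    n, m = len(a), len(b)
    if n == 0 or m == 0:
        raise ValueError("sequences must be non-empty")
    # cost[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # a advances, b's sample is reused
                cost[i][j - 1],      # b advances, a's sample is reused
                cost[i - 1][j - 1],  # both advance together
            )
    return cost[n][m]


# A time-stretched copy of a signal warps onto the original at zero cost,
# whereas a plain point-by-point comparison would report a mismatch.
template = [0.0, 1.0, 2.0, 1.0, 0.0]
stretched = [0.0, 0.0, 1.0, 1.0, 2.0, 2.0, 1.0, 0.0]
print(dtw_distance(template, stretched))  # 0.0
```

The quadratic table is the textbook formulation. Real-time systems often add a band constraint (such as a Sakoe-Chiba window) to limit how far the alignment may drift and to reduce computation.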

Challenge

Speech is the most basic form of human communication, yet millions of people around the world are unable to speak. The causes of this disability vary from person to person. Although people with speech impairments use sign language, most of the population does not understand it, which makes even basic conversations with others difficult. Beyond sign language, few technologies exist to help people with speech impairments communicate with the wider public.

Solution

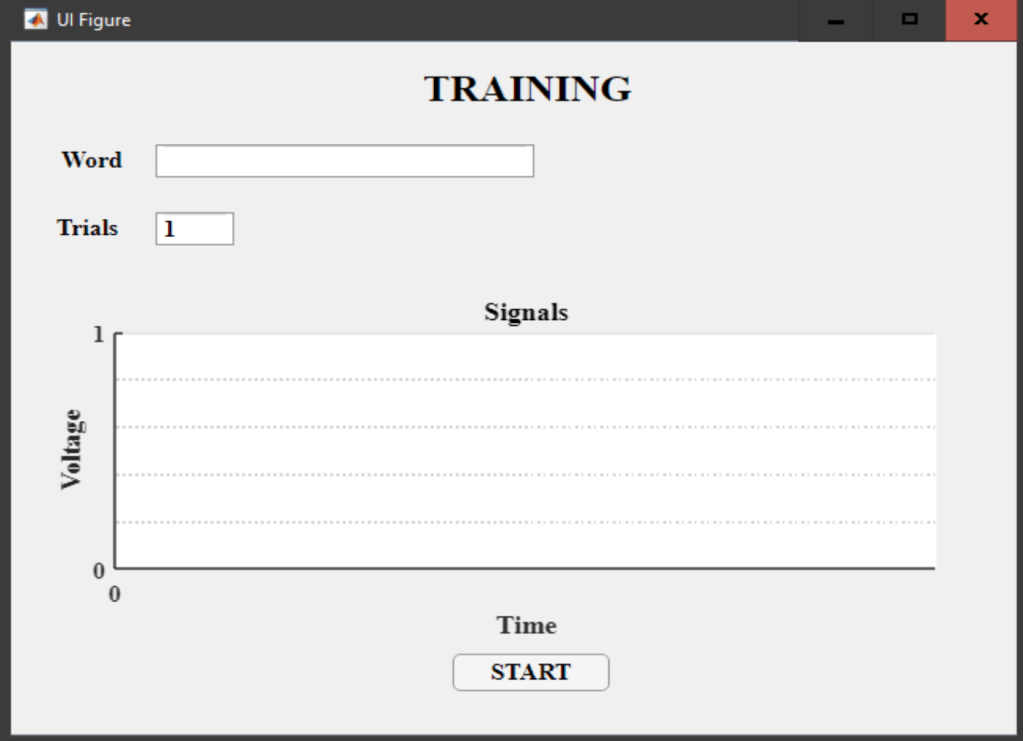

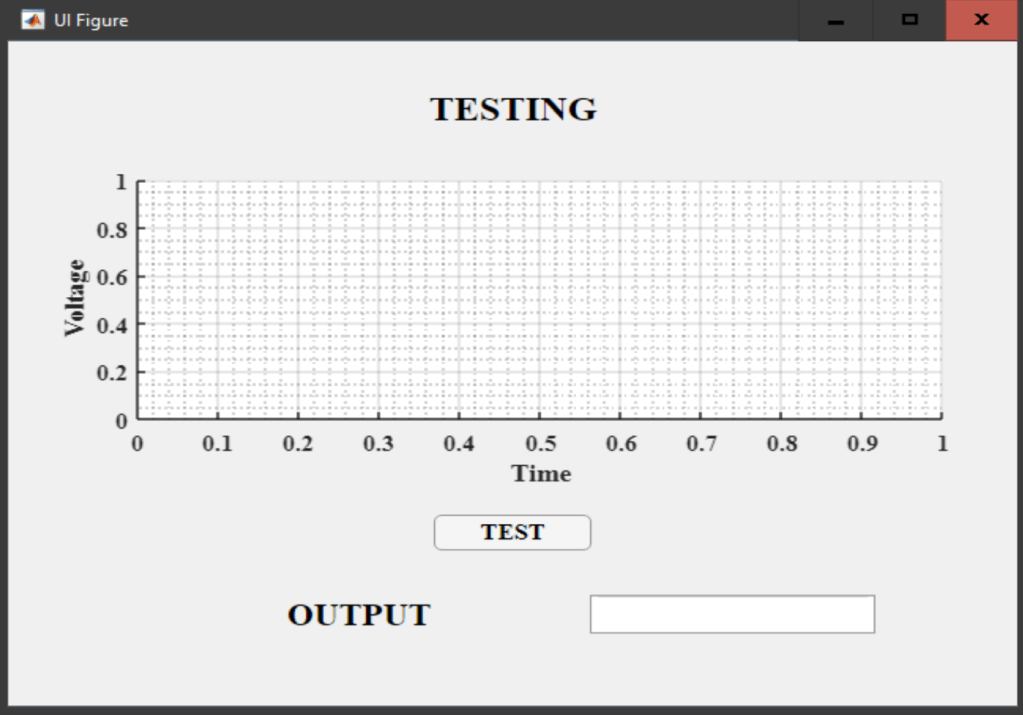

We are building an interface that helps people with speech disabilities communicate with others. EMG sensors attached to the user's neck pick up the electrical signals produced by muscle movements and send them to a computer. First, we record each user's EMG readings while they say each word or perform each action in the vocabulary, building a set of reference recordings. Later, when the user says a word, the new sensor reading is compared in MATLAB against these reference recordings; when it matches one of them, the corresponding word is played through a speaker. The recognized word can also be used to control a nearby device such as a motorized wheelchair. A silent speech interface of this kind could therefore let a differently abled person interact with the objects around them and make daily life easier.
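The enrol-then-match workflow described above can be sketched as a nearest-template classifier. The Python below is a hypothetical illustration, not the project's implementation. The class name `TemplateRecognizer`, the `max_distance` rejection threshold, and the rule "pick the closest template if it is close enough" are all assumptions, because the source does not say how a match is decided. DTW is included again so the block runs on its own.

```python
import math
from typing import Dict, List, Optional, Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Dynamic time warping distance with an absolute-difference local cost."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost[i][j] = abs(a[i - 1] - b[j - 1]) + min(
                cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1]
            )
    return cost[n][m]


class TemplateRecognizer:
    """Hypothetical per-user recognizer: store recordings, match by DTW."""

    def __init__(self, max_distance: float) -> None:
        # Inputs farther than this from every template are rejected
        # (an assumed rule; the source does not specify one).
        self.max_distance = max_distance
        self.templates: Dict[str, List[List[float]]] = {}

    def enroll(self, word: str, recording: Sequence[float]) -> None:
        """Store one reference recording of the user articulating `word`."""
        self.templates.setdefault(word, []).append(list(recording))

    def recognize(self, signal: Sequence[float]) -> Optional[str]:
        """Return the word of the closest template, or None if none is close."""
        best_word: Optional[str] = None
        best_dist = math.inf
        for word, recordings in self.templates.items():
            for rec in recordings:
                d = dtw_distance(signal, rec)
                if d < best_dist:
                    best_word, best_dist = word, d
        return best_word if best_dist <= self.max_distance else None


recognizer = TemplateRecognizer(max_distance=1.0)
recognizer.enroll("water", [0.0, 2.0, 4.0, 2.0, 0.0])
recognizer.enroll("stop", [0.0, 3.0, 0.0, 3.0, 0.0])
print(recognizer.recognize([0.0, 2.0, 2.0, 4.0, 2.0, 0.0]))  # water
print(recognizer.recognize([5.0, 5.0, 5.0]))                 # None
```

Returning `None` rather than always picking the nearest word is one way to avoid speaking, or sending a wheelchair command for, an input that resembles no enrolled recording. The threshold value would need to be tuned per user and per sensor setup.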

PUBLICATIONS

Paper presented at REV2019: Cyber-physical Systems and Digital Twins

Read the complete paper: https://drive.google.com/file/d/1HM1cUpiiHTPBSIEI6nAqYSOEIwpGLDKP/view?usp=sharing

Recognition

Won the second-best research paper award for “Silent Speech Recognition” at the National Conference and Projethon on Science, Engineering and Management 2019, held at The Oxford College of Engineering, Bangalore.

Finalist at the Pravega Innovation Summit, conducted at the Indian Institute of Science, Bangalore, presenting our project “Silent Speech Recognition for the Differently Abled”.

Contributors:

- Josh Elias Joy

- Ajay Yadukrishnan

- Poojith V

- Prathap J